I thought it would be fun to test Google Lens and see what it can recognize. This is not a formal test, very random as a matter of fact. It’s currently in beta for users, but I just had to share some of the results. It’s available in version 3.9 of Google Photos. So be on the lookout. If you want more info on Google Lens see this article for Google news.

All in all, the machine learning behind Lens is amazing, but it generated some funny results too. Most of the images I show below are the bloopers, but it got just as many right. It was actually a lot of fun to run different photos through Lens. Since I have a preview version, the public release may yield different results. I’m still trying to figure out how their algorithms describe an object or photo.

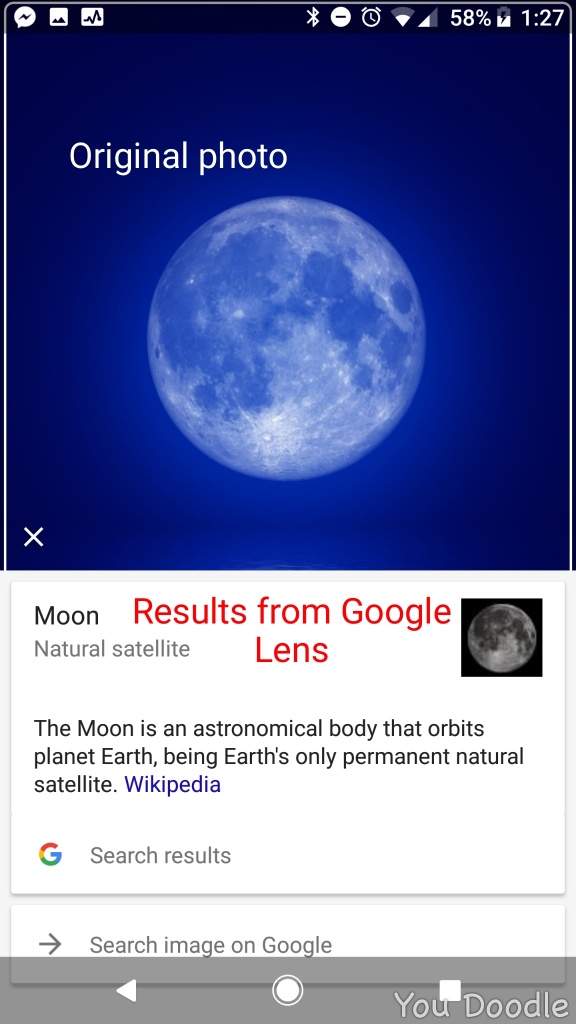

Note: On each of the screen grabs, the original photo is on top, and the bottom half is what Google Lens thinks it is.

EXAMPLE SCREEN GRAB

Here are screen grabs from photos run through Google Lens, with insights directly below.

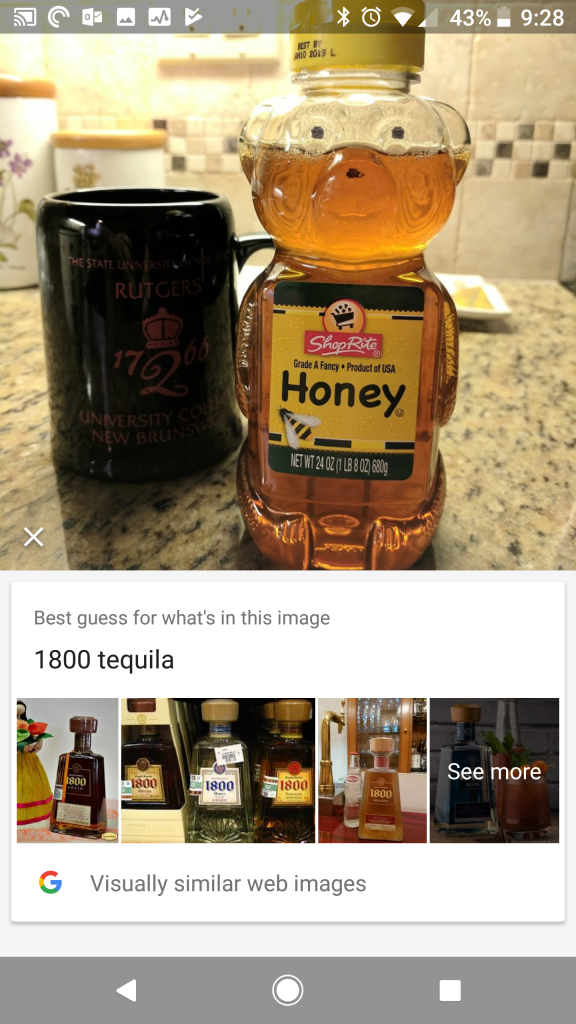

I’m starting with the funniest one. Instead of reading the text that said it was honey, Lens went by the color and shape and decided it was tequila. LOL

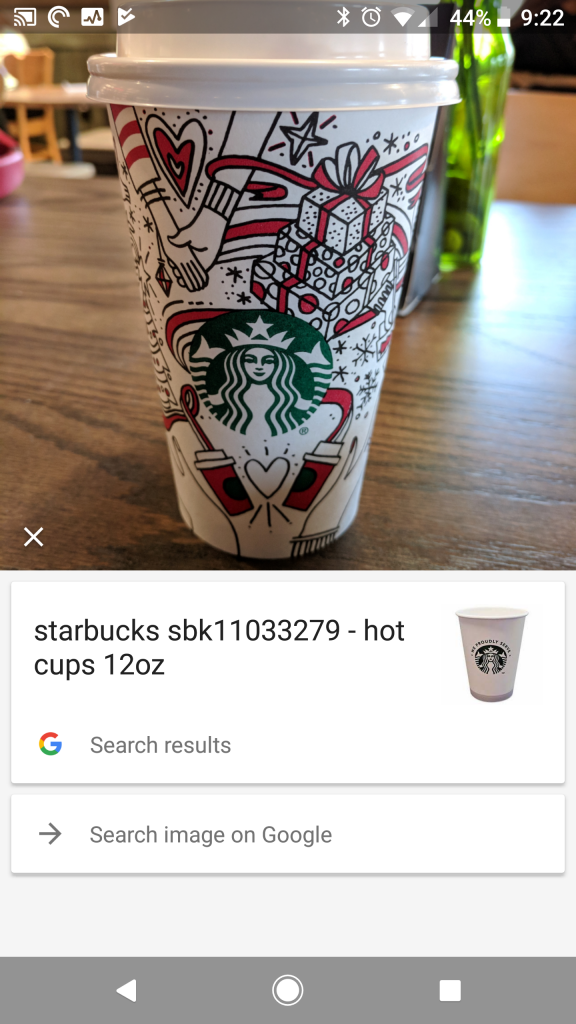

So, Lens basically got the Starbucks cup correct. Even pulled up a stock number. But turn the cup a little bit and forget it.

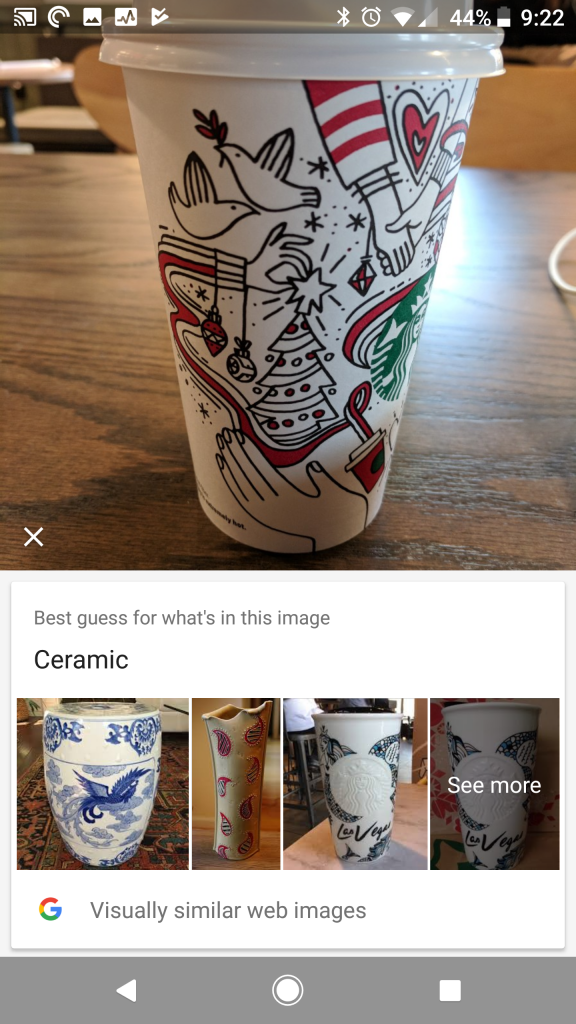

It’s a ceramic

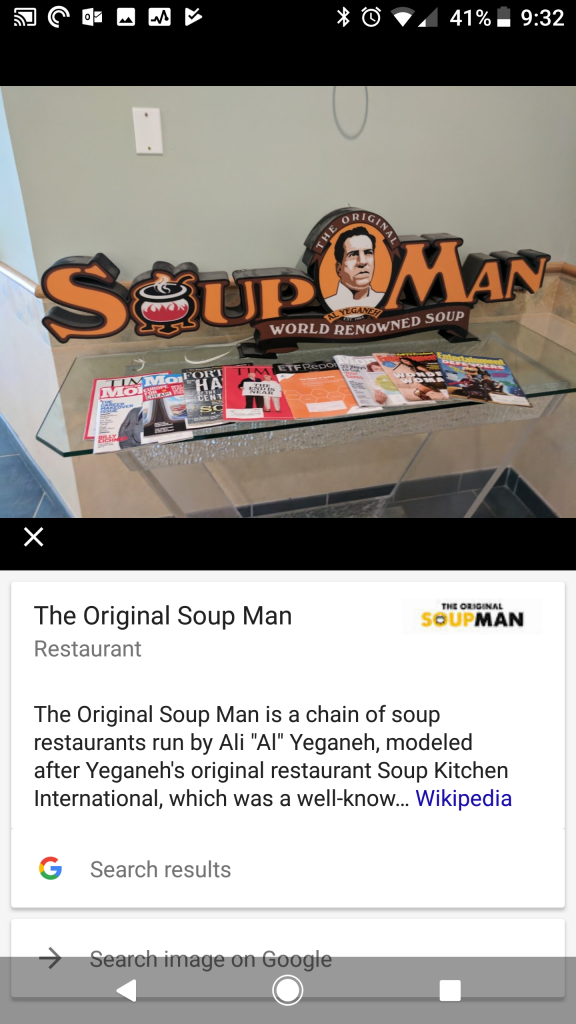

And yet it read this crazy type correctly.

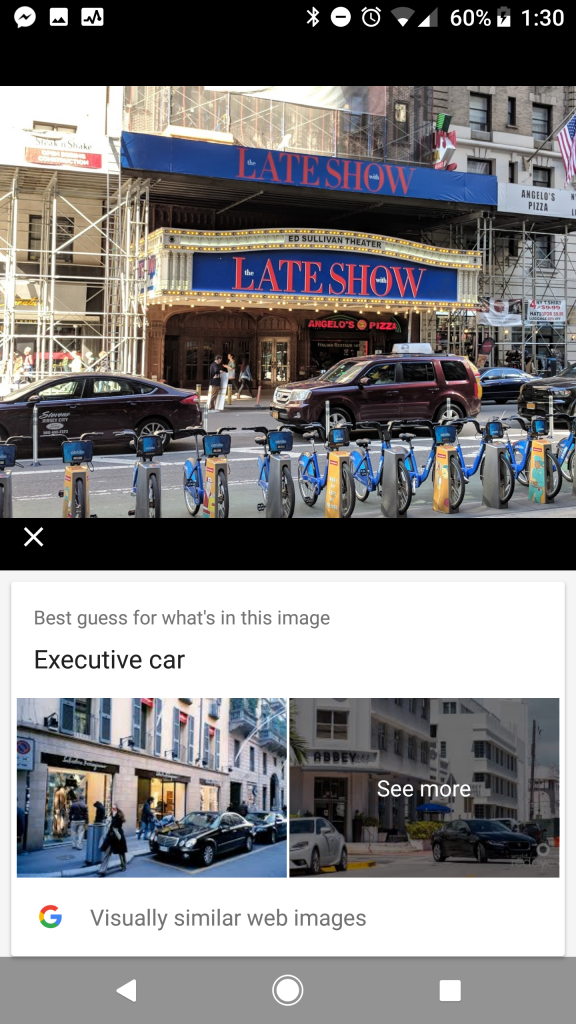

So I thought Lens would read the text first, but it went for the car instead.

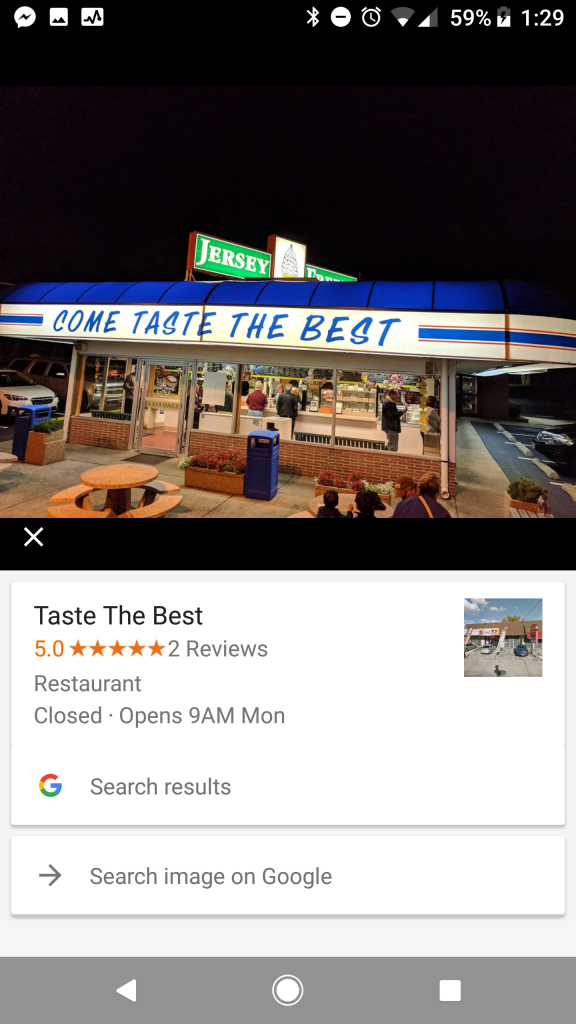

So, at first glance this looks correct, since it read the text. However, the name of the place is “Jersey Freeze” in New Jersey, not “Taste the Best” located in Jamaica, NY.

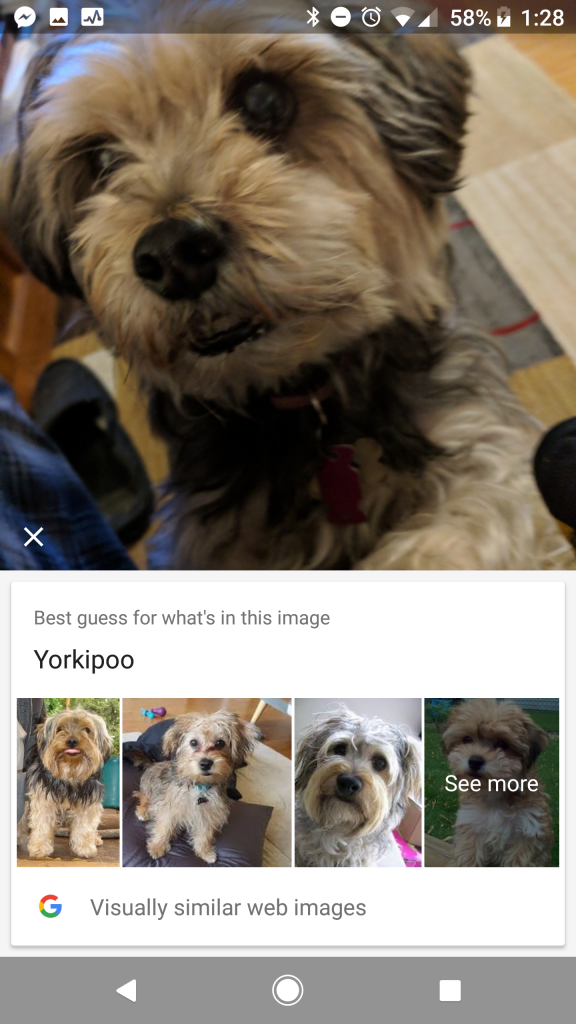

The old dog picture was pretty accurate. Our dog is a Yorkshire. Not sure if she’s a mixed breed, but you never know.

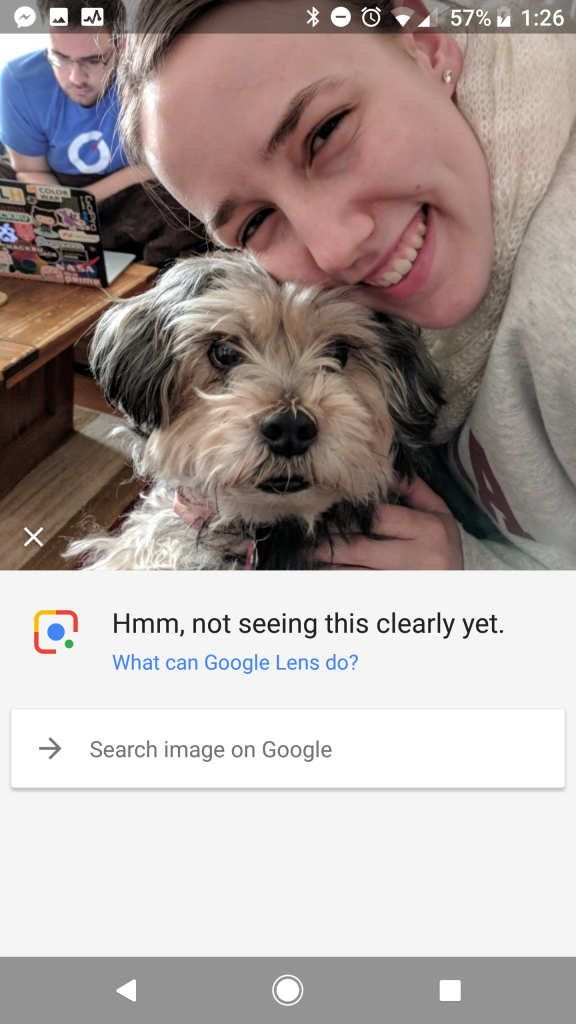

But now the same dog is in the picture and Lens is stumped. I’m surprised at this result. It knew the dog before, and Google knows my daughter. It picks her out all the time when I search for her image.

This one is hit and miss. It got the Starbucks right, but it’s an iced coffee, and it’s not gold deco flakes. I’m surprised it even guessed at the type of drink.

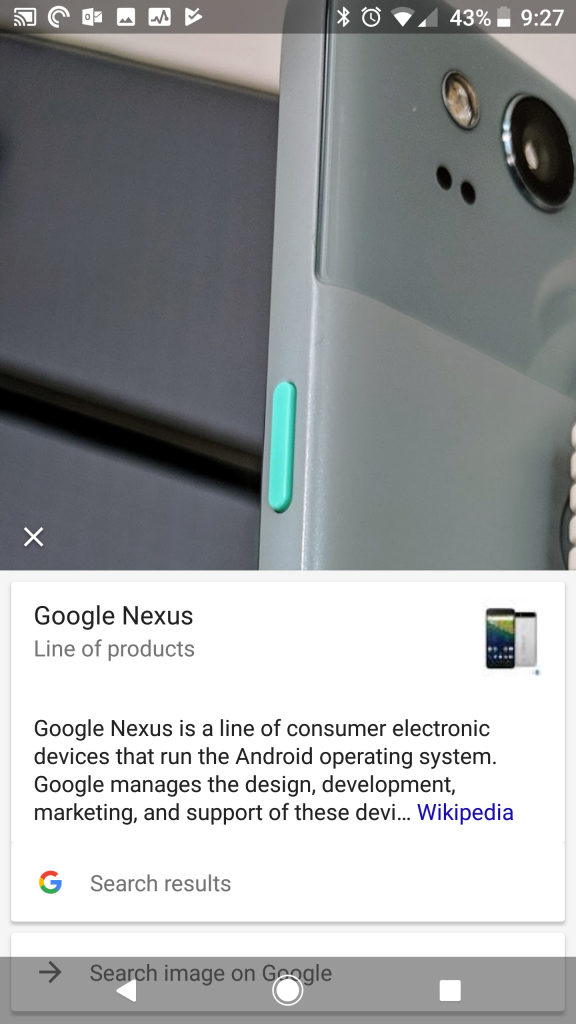

Funny that it says the Google Pixel 2 is a Nexus. I mean, the green button is so unique.

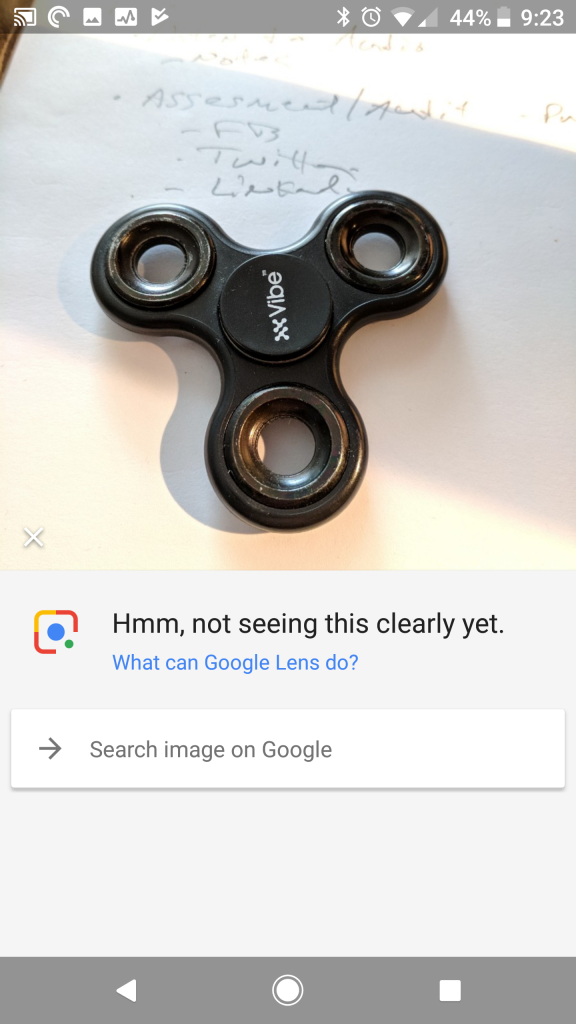

How could it not know a fidget spinner?

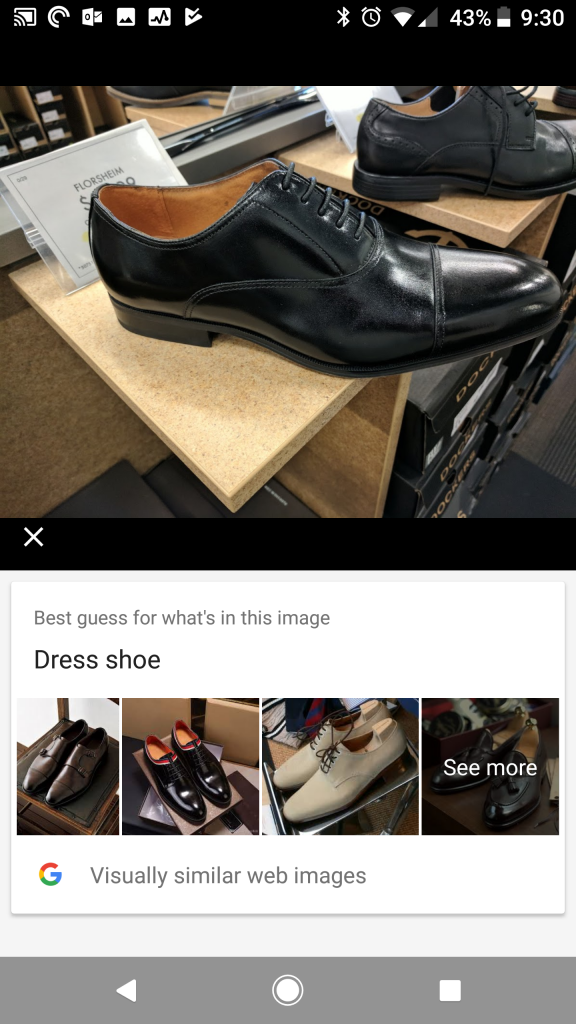

So it got the shoe. But why doesn’t Lens use my location to go even further and say which store it’s from? Just saying.

Again, an everyday product that it didn’t recognize.

It knows Apple products, even without the logo.

And it knows Google products too – thank goodness.

This software is fun right now. Soon it will be useful. The algorithms will be finessed and the machine learning will get better. Having the ability to look at an object and describe that item is going to be a big deal – soon. Look for the general release soon.